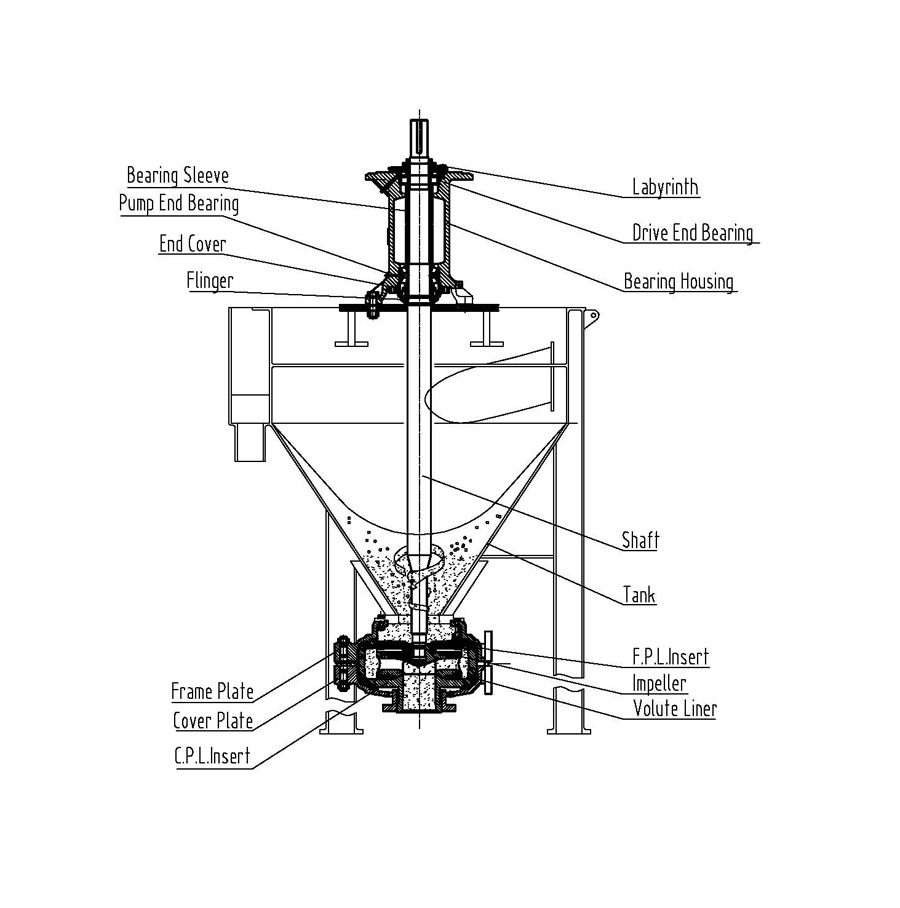

Foam pumps are typically used to convey abrasive or corrosive frothy slurries. The range includes the High Efficiency Froth Handling Froth Pump, the Heavy Duty Fine Tailing Handling Froth Pump, and the Centrifugal Oil Sand Handling Froth Pump. A typical feature of this vertical centrifugal slurry pump is its double-casing design, which is split along the centerline. The wet parts of these vertical froth slurry pumps are replaceable and made of abrasion-resistant high-chromium alloy. Because of its special impeller design, this froth slurry pump is widely used in copper and gold mining for flotation and mineral concentration, and in oil sand processing.

Foam Pump, Centrifugal Foam Pump, Sand Handling Froth Pump, Tailing Handling Froth Pump

SHIJIAZHUANG MUYUAN INDUSTRY & TRADE CO., LTD., https://www.cnmuyuan.com